Because of this, allow time to set goals, analyze current testing procedures, and assemble the right implementation team before launching an ETL test automation project. As the volume and velocity of data rise, ETL processes need to scale accordingly. Performance optimization becomes a challenge, especially for real-time ETL processes. The chosen ETL tools and the underlying infrastructure need to handle the data loads efficiently. As the amount of data grows, protecting it becomes critical. Enterprises that store and process large quantities of data need to ensure the security of the stored data and remain compliant with legal requirements.

Slow processing or sluggish end-to-end performance caused by large data volumes can affect data accuracy and completeness. Once these checks pass, you can move forward with ETL knowing that your data quality is sound. Data integration enables you to transform structured and unstructured data and deliver it to any system on a scalable big data platform.
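A completeness check of the kind mentioned above can be sketched in a few lines of Python. This is a minimal illustration, not a prescribed method: the function name `completeness_report`, the `id` key, and the sample rows are all assumptions made for the example.

```python
from typing import Any, Iterable, Mapping


def completeness_report(
    source_rows: Iterable[Mapping[str, Any]],
    target_rows: Iterable[Mapping[str, Any]],
    key: str,
) -> dict:
    """Compare source and target by row count and by key membership."""
    source_rows = list(source_rows)
    target_rows = list(target_rows)
    source_keys = {row[key] for row in source_rows}
    target_keys = {row[key] for row in target_rows}
    return {
        "source_count": len(source_rows),
        "target_count": len(target_rows),
        # Keys that were extracted but never arrived in the target.
        "missing_in_target": sorted(source_keys - target_keys),
        # Keys in the target that have no counterpart in the source.
        "unexpected_in_target": sorted(target_keys - source_keys),
    }


# Hypothetical sample data: row 2 was lost somewhere in the pipeline.
source = [{"id": 1, "amount": 10.0}, {"id": 2, "amount": 5.5}, {"id": 3, "amount": 7.25}]
target = [{"id": 1, "amount": 10.0}, {"id": 3, "amount": 7.25}]

report = completeness_report(source, target, key="id")
print(report)
```

Comparing key sets as well as counts catches the case where one row is dropped and another is duplicated, which a count comparison alone would miss.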

Step 4: Validation

Though a standard process in any high-volume data environment, ETL is not without its own challenges. Data is often messy and full of errors, yet ETL testing needs clean, accurate data to produce reliable results. Report testing reviews data in summary reports, verifying that layout and functionality are as expected, and performs calculations. Metadata testing performs data type, length, index, and constraint checks on ETL application metadata. Hosted by Al Martin, VP, IBM Expert Services Delivery, Making Data Simple provides the latest thinking on big data, A.I., and the implications for the enterprise from a range of experts.
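The metadata checks described above (data type, length, and constraints) could look roughly like the following. This is a sketch that assumes a SQLite target and a hypothetical `customers` table. The `EXPECTED_SCHEMA` mapping and the `check_metadata` helper are invented for illustration, and index checks are left out for brevity.

```python
import sqlite3

# Hypothetical expected schema:
# column name -> (declared type, NOT NULL flag, primary-key flag)
EXPECTED_SCHEMA = {
    "customer_id": ("INTEGER", True, True),
    "email": ("VARCHAR(255)", True, False),
    "signup_date": ("TEXT", False, False),
}


def check_metadata(conn, table, expected):
    """Return a list of mismatches between actual and expected column metadata."""
    # PRAGMA table_info yields (cid, name, type, notnull, dflt_value, pk) per column.
    # The table name is interpolated directly, so it must come from trusted code.
    actual = {
        row[1]: (row[2].upper(), bool(row[3]), bool(row[5]))
        for row in conn.execute(f"PRAGMA table_info({table})")
    }
    problems = []
    for column, spec in expected.items():
        if column not in actual:
            problems.append(f"missing column: {column}")
        elif actual[column] != spec:
            problems.append(f"{column}: expected {spec}, found {actual[column]}")
    for column in sorted(actual.keys() - expected.keys()):
        problems.append(f"unexpected column: {column}")
    return problems


conn = sqlite3.connect(":memory:")
# The email column is deliberately declared shorter than expected.
conn.execute(
    "CREATE TABLE customers ("
    "customer_id INTEGER PRIMARY KEY NOT NULL, "
    "email VARCHAR(100) NOT NULL, "
    "signup_date TEXT)"
)
problems = check_metadata(conn, "customers", EXPECTED_SCHEMA)
print(problems)
```

Other databases expose the same information through their own catalogs (for example `information_schema.columns`), so only the query inside `check_metadata` would need to change.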

- Data virtualization: Virtualization is an agile method of blending data together to create a virtual view of data without moving it.

- This includes applying business rules, data cleansing techniques, data validation, and aggregation operations to ensure accuracy, data quality, and integrity.

- This is not the case with ETL; the transformation takes place on a separate server.

- Therefore, if a tester does not understand the requirements and the design of the ETL process, they are bound to write faulty test cases that can compromise data quality.
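The transformation activities listed above (business rules, cleansing, validation, and aggregation) can be illustrated with a small Python sketch. The order fields, the "amount must be positive" rule, and the `transform` function are hypothetical choices for this example, not part of any particular ETL tool.

```python
from collections import defaultdict

# Hypothetical raw extract: inconsistent casing, stray whitespace, bad values.
raw_orders = [
    {"order_id": "1", "region": " north ", "amount": "100.50"},
    {"order_id": "2", "region": "North", "amount": "49.50"},
    {"order_id": "3", "region": "south", "amount": "not-a-number"},
    {"order_id": "4", "region": "South", "amount": "-5"},
]


def transform(rows):
    """Cleanse, validate, and aggregate order rows; return (totals, rejected)."""
    totals = defaultdict(float)
    rejected = []
    for row in rows:
        # Cleansing: normalize whitespace and casing of the region name.
        region = row["region"].strip().lower()
        # Validation: the amount must parse as a number.
        try:
            amount = float(row["amount"])
        except ValueError:
            rejected.append((row["order_id"], "amount is not numeric"))
            continue
        # Business rule (assumed for this sketch): amounts must be positive.
        if amount <= 0:
            rejected.append((row["order_id"], "amount must be positive"))
            continue
        # Aggregation: total amount per region.
        totals[region] += amount
    return dict(totals), rejected


totals, rejected = transform(raw_orders)
print(totals)
print(rejected)
```

Keeping rejected rows alongside the reason for rejection gives a tester something concrete to assert on, rather than only checking the aggregated output.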

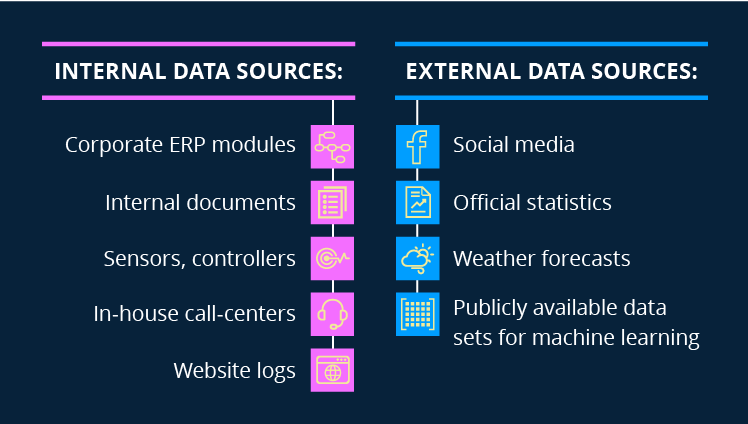

Their scalability gives teams the flexibility to adjust data pipelines, add new data sources, and modify transformations as needed. IBM offers several data integration services and solutions designed to support a business-ready data pipeline and give your enterprise the tools it needs to scale efficiently. IBM, a leader in data integration, gives enterprises the confidence they need when managing big data projects, applications, and machine learning technology. In today's business environment, organizations often use multiple software applications, relational databases, and systems, each with its own data format and structure.

ETL Testing Tools

The end result is an integrated view of business data, which can be extremely valuable for gaining holistic insights into business operations and performance. ETL has evolved to support integration across far more than traditional data warehouses. Advanced ETL tools can load and convert structured and unstructured data into Hadoop. These tools read and write multiple files in parallel from and to Hadoop, simplifying how data is merged into a common transformation process. Some solutions incorporate libraries of prebuilt ETL transformations for both the transaction and interaction data that run on Hadoop. ETL also supports integration across transactional systems, operational data stores, BI platforms, master data management hubs, and the cloud.

ETL tools are applications or platforms that allow users to run ETL processes. In simple terms, these tools help businesses move data from one or many disparate data sources to a destination. They make the data both digestible and accessible (and in turn analysis-ready) in the desired location, often a data warehouse. By verifying data quality across distributed data sources and using data profiling tools to find data quality issues, this approach can be extended to big data management. The transformed data in a data warehouse enables easy and fast access to the data.
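To make the idea of data profiling more concrete, here is a minimal Python sketch that summarizes null counts, distinct values, and the most common value per column. Dedicated profiling tools do far more than this. The `profile` function and the sample rows are assumptions made for illustration.

```python
from collections import Counter


def profile(rows):
    """Return per-column null counts, distinct counts, and the most common value."""
    columns = sorted({name for row in rows for name in row})
    summary = {}
    for name in columns:
        values = [row.get(name) for row in rows]
        # Treat None and empty strings as missing for this sketch.
        present = [v for v in values if v not in (None, "")]
        counts = Counter(present)
        summary[name] = {
            "nulls": len(values) - len(present),
            "distinct": len(counts),
            "most_common": counts.most_common(1)[0][0] if counts else None,
        }
    return summary


# Hypothetical sample rows with one missing country and one empty email.
rows = [
    {"id": 1, "country": "DE", "email": "a@example.com"},
    {"id": 2, "country": "DE", "email": ""},
    {"id": 3, "country": None, "email": "c@example.com"},
]

summary = profile(rows)
for column, stats in summary.items():
    print(column, stats)
```

A profile like this is often run on both the source and the warehouse table, so that unexpected shifts in null or distinct counts surface before the data reaches reports.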